Markdown test specifications

Overview

xcdiff has recently been open sourced 🎉. It’s a command line tool we’ve been working on to aid us with our migration over to using generated Xcode projects.

Today I wanted to shine a small spotlight on an interesting testing technique we employed for xcdiff: using markdown files to define test specifications.

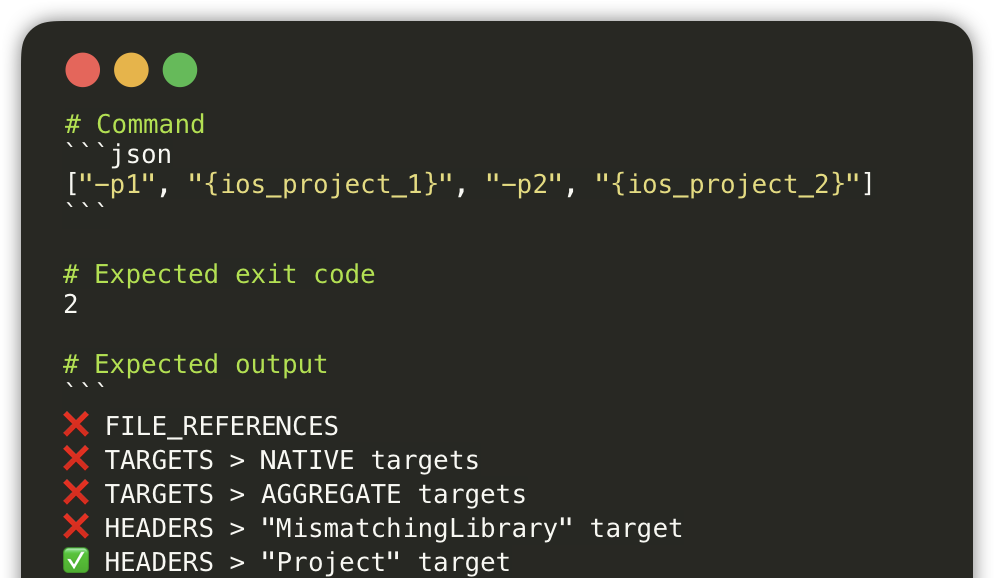

Here’s an example of what that looks like:

Please note that even though I am writing this post, credit goes to @marciniwanicki and @adamkhazi for all their hard work on this, I am merely jotting it down in blog form 🙂.

What is xcdiff?

It’s a command line tool that helps us compare Xcode projects against one another. In the context of migrating over to leveraging generated projects using tools like XcodeGen or Tuist, it provides us with a mechanism to verify what we’ve generated matches the original project we previously had.

It does so by using a number of different “comparators”, each of which can diff a specific attribute of an Xcode project, such as “sources”, “headers”, “settings” and more.

The final results are combined into a report, which can be in any of these formats: console, markdown or json.

For example here’s a snippet from a console formatted report:

xcdiff -p1 OriginalProject.xcodeproj -p2 GeneratedProject.xcodeproj -v

❌ SOURCES > "Project" target

⚠️ Only in first (1):

• Project/Group B/AnotherObjcClass.m

⚠️ Value mismatch (1):

• Project/Group A/ObjcClass.m compiler flags

◦ nil

◦ -ObjC

The report indicates that our generated project is missing a source file, AnotherObjcClass.m, and that the source file ObjcClass.m has mismatching compiler flags set.

The original motivation

In addition to having unit tests for xcdiff we wanted to have a suite of integration tests that exercise all of xcdiff’s components together on some real projects.

Initially, we had a few XCTest based tests written out for those integration tests:

func testRun_whenDifferentProjects() {
    // Given
    let command = buildCommand()

    // When
    let code = sut.run(with: command)

    // Then
    XCTAssertEqual(printer.output, """
    ❌ TARGETS > Native
    ❌ TARGETS > Aggregate

    """)
    XCTAssertEqual(code, 1)
}

However, we soon noticed a few issues with this approach:

- We were constantly updating those tests as we added more features

- Getting the multi-line strings to be a perfect match to the output was more challenging than we thought - more often than not we would miss a new line at the end!

For example, the test above is a holistic “show me a summary of all the differences” between two projects. When we added a sources comparator, the test needed to be updated as the output now included that new comparator’s results:

XCTAssertEqual(printer.output, """
❌ TARGETS > Native
❌ TARGETS > Aggregate
❌ SOURCES > "Project" target

""")

This was somewhat manageable with a handful of those tests, but we wanted to test the output for a few different argument combinations:

- --format console

- --format console --verbose

- --format markdown

- --format markdown --verbose

- --format json

- --format json --verbose

We really liked the power of those tests; however, we couldn’t realistically scale them further or introduce additional combinations of arguments.

So we started exploring some alternate options.

External files

Rather than constantly copying and pasting the expected output into the test, we found it much simpler to run the command and pipe its output to a file:

xcdiff --format markdown --verbose > markdown_verbose.md
xcdiff --format json --verbose > json_verbose.json

Using this approach, we modified our test to read those files and validate the command output against their contents.

func testRun_whenDifferentProjects() {
    // Given
    let command = buildCommand("--format", "markdown", "--verbose")

    // When
    let code = sut.run(with: command)

    // Then
    XCTAssertEqual(printer.output, expectedOutput(file: "markdown_verbose.md"))
    XCTAssertEqual(code, 1)
}

// MARK: - Helpers

private func expectedOutput(file: String) -> String {
    // read file contents from a known location
}

This helped us manage those tests better and reduced the size of the test code, as it was no longer mixed with lengthy expected output strings!
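The body of the helper is left out above. As a minimal sketch of what it could look like, assuming the expected-output files live in a hypothetical `Fixtures` folder next to the test source file (the folder name and the `in:` parameter are illustrative, not taken from xcdiff):

```swift
import Foundation

// Hypothetical location: a "Fixtures" folder next to this source file.
// #filePath expands to the full path of the current source file.
let fixturesDirectory = URL(fileURLWithPath: #filePath)
    .deletingLastPathComponent()
    .appendingPathComponent("Fixtures")

/// Reads the expected output for a test from `directory`.
/// Throws if the file is missing or is not valid UTF-8.
func expectedOutput(file: String, in directory: URL = fixturesDirectory) throws -> String {
    let url = directory.appendingPathComponent(file)
    return try String(contentsOf: url, encoding: .utf8)
}
```

With a throwing helper like this, the test method itself would be declared `throws`, so a missing file shows up as a test failure rather than a crash.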

Automating file generation

Using external files helped us scale our tests, but as we did, the first issue (keeping the outputs in sync) became tedious and repetitive:

xcdiff --format markdown --verbose > markdown_verbose.md
xcdiff --format json --verbose > json_verbose.json
...
...

However, this process is scriptable! So we wrote a small script that ran through all our test commands to generate our files.

Scripts/generate_tests_commands_files

This way we didn’t need to constantly figure out which commands we needed to run to regenerate the files.
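The script itself isn't shown here. Purely as an illustration of the idea, here is a Swift sketch that reruns each command and overwrites its expected-output file. The function names and the `files` mapping are hypothetical, and this is not the script from the xcdiff repository:

```swift
import Foundation

/// Runs an executable and returns everything it wrote to standard output.
func captureOutput(of executable: URL, arguments: [String]) throws -> String {
    let process = Process()
    process.executableURL = executable
    process.arguments = arguments
    let pipe = Pipe()
    process.standardOutput = pipe
    try process.run()
    // Read before waiting so a large output cannot fill the pipe and stall the child.
    let data = pipe.fileHandleForReading.readDataToEndOfFile()
    process.waitUntilExit()
    return String(decoding: data, as: UTF8.self)
}

/// Regenerates every expected-output file by rerunning its command.
func regenerateFiles(_ files: [String: [String]], using executable: URL, in directory: URL) throws {
    for (fileName, arguments) in files.sorted(by: { $0.key < $1.key }) {
        let output = try captureOutput(of: executable, arguments: arguments)
        let destination = directory.appendingPathComponent(fileName)
        try output.write(to: destination, atomically: true, encoding: .utf8)
    }
}

// Hypothetical mapping from file name to the arguments that produce it.
let files = [
    "markdown_verbose.md": ["--format", "markdown", "--verbose"],
    "json_verbose.json": ["--format", "json", "--verbose"],
]
```

Calling `regenerateFiles(files, using:in:)` with the location of a built xcdiff binary would then refresh every file in one go.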

Making files self describing

In order to add a new integration test we needed to manually add new test cases in code as well as a corresponding expected output file. We saw an opportunity for improvement here.

We wanted a solution that would allow us to simply add a file and have it automatically evaluated as part of our integration tests.

Iterating over a directory of files is fairly straightforward. What we lacked was a way to convey all the information a test needs. Each integration test needs three pieces of information:

- The command to run

- The expected exit code

- The expected output

We already had the output: it was the content of the file itself. We just needed to find a way to convey the other two.

Our first attempt was to encode that in the file name:

<command>.<exitcode>.txt

For example:

--format markdown --verbose.1.txt

This worked! We weren’t sure if we’d be able to encode all our parameters as file names, but to our surprise it was ok.
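To make the naming scheme concrete, here is a small sketch of how such a file name could be decoded. `ParsedFileName` and `parseFileName` are hypothetical names, and the sketch assumes that individual arguments never contain spaces:

```swift
import Foundation

struct ParsedFileName: Equatable {
    let arguments: [String]
    let exitCode: Int32
}

/// Decodes "<command>.<exitcode>.txt" into its arguments and exit code.
/// Returns nil when the name does not follow that pattern.
func parseFileName(_ fileName: String) -> ParsedFileName? {
    let parts = fileName.components(separatedBy: ".")
    // The last two components are the exit code and the "txt" extension.
    guard parts.count >= 3,
          parts.last == "txt",
          let exitCode = Int32(parts[parts.count - 2])
    else { return nil }
    // Everything before them is the command, which may itself contain dots.
    let command = parts.dropLast(2).joined(separator: ".")
    let arguments = command.split(separator: " ").map(String.init)
    return ParsedFileName(arguments: arguments, exitCode: exitCode)
}

let parsed = parseFileName("--format markdown --verbose.1.txt")
```

Here `parsed` holds the three arguments and the exit code `1`, while a name such as `notes.txt` yields `nil`.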

So we updated our integration test to scan a directory called CommandTests where all those text files lived:

func testRun_commands() {
    let commandTests = parseFiles(in: "CommandTests")
    commandTests.forEach { commandTest in
        // Given
        let command = buildCommand(commandTest.command)

        // When
        let code = sut.run(with: command)

        // Then
        XCTAssertEqual(printer.output, commandTest.output)
        XCTAssertEqual(code, commandTest.exitCode)
    }
}

This allowed us to significantly boost the number of integration tests we had. Adding a test was now a matter of adding a new file to a directory, and updating existing ones was a matter of running the generate script.

Markdown files

As we introduced more comparators and corresponding tests, we noticed that there was room for yet more improvement.

Having commands and exit codes encoded in the filename works well for our test code, just sadly not for us mortal humans. We found that reviewing those files wasn’t as smooth as we would have liked. We needed to inspect the file name (which could be fairly lengthy) separately from the file content to see what the test case was.

So we decided to try moving that information into the file contents, such that now the contents contain all three pieces of information needed for the test.

Now we had to think of a format for structuring the file internally. Our first thought was fairly simple:

<command>
<exit code>
<output>

- line 1: command

- line 2: expected exit code

- line 3 to end of file: expected output

However, seeing as we could craft this file in any format we wanted, why not make it even more readable by giving it some structure? Better still, some structure that renders well in our editors and on GitHub!

Thus the markdown idea! We structured the file in the following format:

# Command

```json
<JSON REPRESENTATION OF THE COMMAND>
```

# Expected exit code

<NUMBER>

# Expected output

```
<CONTENT OF THE OUTPUT>
```

For example:

# Command

```json
["--foobar"]
```

# Expected exit code

1

# Expected output

```
ERROR: unknown option --foobar; use --help to list available options
```

This of course didn’t come for free: we had to write a custom parser using Scanner, but we thought it was worth the effort given the final results.
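The parser isn't reproduced in this post, so what follows is only a sketch of how a Scanner-based parser for this layout might look. `CommandTest` and `parseCommandTest` are hypothetical names, and the sketch assumes the expected output never contains a run of three backticks of its own:

```swift
import Foundation

struct CommandTest: Equatable {
    let command: [String]
    let exitCode: Int32
    let output: String
}

// Three backticks, built at runtime so this listing can sit inside a fenced block.
let fence = String(repeating: "`", count: 3)

func parseCommandTest(_ markdown: String) -> CommandTest? {
    let scanner = Scanner(string: markdown)
    // Whitespace is significant in the expected output, so skip nothing implicitly.
    scanner.charactersToBeSkipped = nil

    // Advances past the next occurrence of `marker`; false if it never appears.
    func skip(past marker: String) -> Bool {
        _ = scanner.scanUpToString(marker)
        return scanner.scanString(marker) != nil
    }

    // Command: a JSON array of arguments inside a json-tagged fence.
    guard skip(past: "# Command"),
          skip(past: fence + "json\n"),
          let json = scanner.scanUpToString(fence),
          let command = try? JSONDecoder().decode([String].self, from: Data(json.utf8))
    else { return nil }

    // Exit code: the first non-blank line under its heading.
    guard skip(past: "# Expected exit code") else { return nil }
    _ = scanner.scanCharacters(from: .whitespacesAndNewlines)
    guard let codeText = scanner.scanUpToCharacters(from: .newlines),
          let exitCode = Int32(codeText.trimmingCharacters(in: .whitespaces))
    else { return nil }

    // Output: everything between the opening fence line and the closing fence,
    // including the final newline.
    guard skip(past: "# Expected output"), skip(past: fence + "\n") else { return nil }
    let output = scanner.scanUpToString(fence) ?? ""
    guard scanner.scanString(fence) != nil else { return nil }

    return CommandTest(command: command, exitCode: exitCode, output: output)
}

let sample = """
# Command

\(fence)json
["--foobar"]
\(fence)

# Expected exit code

1

# Expected output

\(fence)
ERROR: unknown option --foobar; use --help to list available options
\(fence)
"""
let parsedTest = parseCommandTest(sample)
```

Keeping the trailing newline in `output` is a deliberate choice in this sketch: it means the file content can be compared to the printed output byte for byte.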

Final workflow

After many iterations we now have the following workflow:

To add new test cases:

- Update a json file that contains a list of all the commands we’d like to run integration tests for

  - generated_test_commands.json: This file hosts a list of arguments which the test generator uses to generate various command combinations

  - manual_test_commands.json: This file hosts commands we manually add

- Run the test generator Scripts/generate_tests_commands_files.py

  - This reads the json files to generate our markdown test specifications in the CommandTests directory

- Review the generated files

For newly added commands, the review step helps both the author and the reviewer see a real example of the output without them needing to build and run xcdiff.

For existing commands, regressions or changes to the output format are easy to spot, as they appear in the git diff.

Lastly, all of the markdown files are run through the CommandBasedTests integration tests to ensure they reflect reality.
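The real generator is a Python script, so the following Swift sketch only illustrates the two ideas behind it: expanding a list of arguments into command combinations, and rendering one specification file. Both function names are hypothetical, and the exact combination rule (every format, with and without `--verbose`) is an assumption based on the six combinations listed earlier:

```swift
import Foundation

// Three backticks, built at runtime so this listing can sit inside a fenced block.
let fence = String(repeating: "`", count: 3)

/// Every format, once without and once with --verbose (an assumed rule).
func commandCombinations(formats: [String]) -> [[String]] {
    formats.flatMap { format in
        [["--format", format], ["--format", format, "--verbose"]]
    }
}

/// Renders one specification in the three-section markdown layout.
func renderSpecification(command: [String], exitCode: Int32, output: String) throws -> String {
    let json = String(decoding: try JSONEncoder().encode(command), as: UTF8.self)
    // Make sure the closing fence starts on its own line.
    let body = output.isEmpty || output.hasSuffix("\n") ? output : output + "\n"
    return """
    # Command

    \(fence)json
    \(json)
    \(fence)

    # Expected exit code

    \(exitCode)

    # Expected output

    \(fence)
    \(body)\(fence)

    """
}

let combinations = commandCombinations(formats: ["console", "markdown", "json"])
```

Each entry in `combinations` would be run against the tool, and its exit code and output passed to `renderSpecification` to produce one file in the CommandTests directory.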

Future improvements

There’s still room for more improvements, of course. For example, instead of using json:

# Command

```json
["--format", "markdown", "--verbose"]
```

It would be nicer to write out the command as users of the tool would:

# Command

```sh
xcdiff --format markdown --verbose
```

Additionally, all our integration tests appear as a single test case in XCTest. It would be great to find a way to hook into the test runner so that each file appears as an individual test case in the test inspector.

But those could all be made as the need for them arises in the future.
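As a rough illustration of the first idea, a shell-style command line could be turned back into the argument list the tests need. This is a deliberately small sketch with a hypothetical function name: it understands spaces and double quotes only, not the full shell grammar (no escapes, single quotes or variables):

```swift
/// Splits a command line into arguments, honouring double quotes only.
/// Returns nil for unbalanced quotes or when the line does not start with `tool`.
func arguments(fromCommandLine line: String, tool: String = "xcdiff") -> [String]? {
    var tokens: [String] = []
    var current = ""
    var inQuotes = false
    var hasToken = false

    for character in line {
        if character == "\"" {
            inQuotes.toggle()
            hasToken = true  // a pair of empty quotes is still an argument
        } else if character == " " && !inQuotes {
            if hasToken { tokens.append(current) }
            current = ""
            hasToken = false
        } else {
            current.append(character)
            hasToken = true
        }
    }
    if hasToken { tokens.append(current) }

    guard !inQuotes, tokens.first == tool else { return nil }
    return Array(tokens.dropFirst())
}

let shellArguments = arguments(fromCommandLine: "xcdiff --format markdown --verbose")
```

The result is the same array the json block carries today, so the rest of the test code would not need to change.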

Conclusion

What started out as a means to help us reason about our integration tests has also doubled as a source of live documentation for xcdiff’s output that is guaranteed to stay up-to-date!

It is by no means a technique that should be used for all use cases, nor should it be used as a means to overlook a good suite of unit tests. It is, however, an interesting technique that could bring some additional value and is worth considering.